[Software] C.19 Software Testing – Component Level

Categories: Software

📋 This is my note-taking from what I learned in the class “Software Engineering Fundamentals - COMP 120-002”

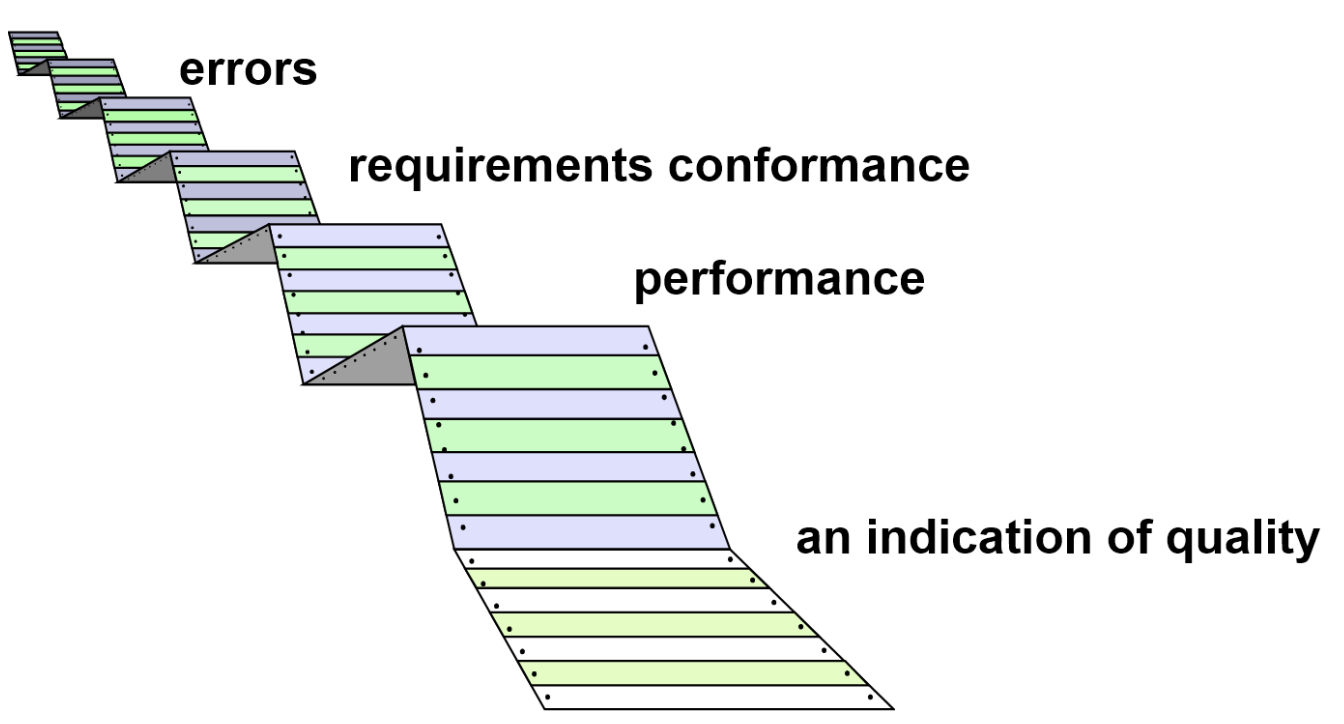

Software Testing Strategies

Testing is the process of exercising a program with the specific intent of finding errors prior to delivery to the end user. Testing is a set of activities that can be planned in advance and conducted systematically.

Testing Strategic Approach

- To perform effective testing, you should conduct effective technical reviews. By doing this, many errors will be eliminated before testing commences.

- Testing begins at the component level and works “outward” toward the integration of the entire computer-based system.

- Different testing techniques are appropriate for different software engineering approaches and at different points in time.

- Testing is conducted by the developer of the software and (for large projects) an independent test group.

- Testing and debugging are different activities, but debugging must be accommodated in any testing strategy.

Verification and Validation

- Verification: Refers to the set of tasks that ensure that software correctly implements a specific function.

- “Are we building the product right?”

- Validation: Refers to a different set of tasks that ensure that the software that has been built is traceable to customer requirements.

- “Are we building the right product?”

Organizing for Testing

- Software developers are always responsible for testing individual program components and ensuring that each performs its designed function or behavior.

- Only after the software architecture is complete does an independent test group become involved.

- The role of an independent test group (ITG) is to remove the inherent problems associated with letting the builder test the thing that has been built.

- ITG personnel are paid to find errors.

- Developers and ITG work closely throughout a software project to ensure that thorough tests will be conducted.

Who Tests the Software?

- Developer: Understands the system but will test “gently” and is driven by “delivery”. They have a vested interest in demonstrating that the program is error-free, that it works according to customer requirements, and that it will be completed on schedule and within budget.

- Independent Tester: Must learn about the system, but will attempt to break it and is driven by quality.

Testing Strategy

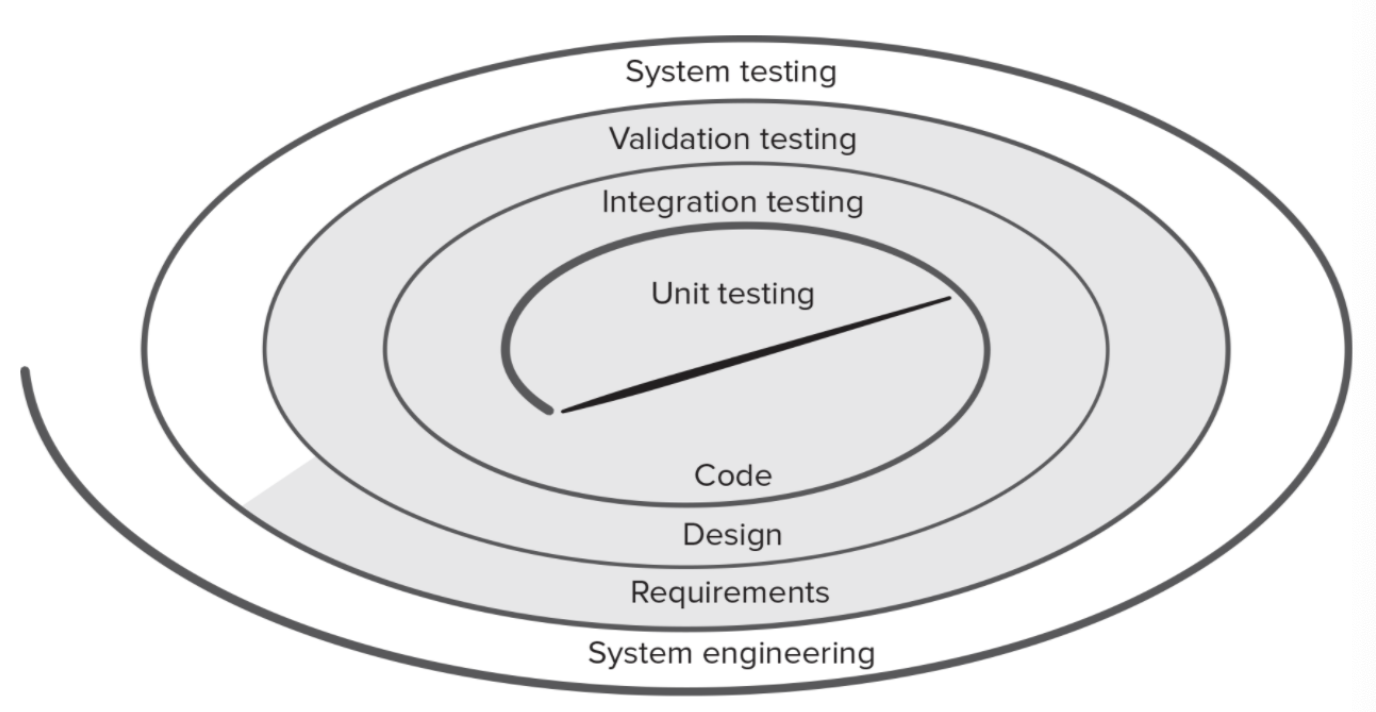

We begin by ‘testing-in-the-small’ and move toward ‘testing-in-the-large’.

Unit testing begins at the center of the spiral and concentrates on each unit (e.g., component, class, or WebApp content object) of the software as implemented in source code.

Testing progresses by moving outward along the spiral to integration testing, where the focus is on design and the construction of the software architecture.

Taking another turn outward on the spiral, you encounter validation testing, where requirements established as part of requirements modeling are validated against the software that has been constructed.

Finally, you arrive at system testing, where the software and other system elements are tested as a whole.

To test computer software, you spiral along streamlines that broaden the scope of testing with each turn.

For conventional software:

- The module (component) is our initial focus

- Integration of modules follows

For object-oriented software, our focus when “testing in the small” changes from an individual module (the conventional view) to an OO class that encompasses attributes and operations and implies communication and collaboration.

Testing the Big Picture

- Unit testing begins at the center of the spiral and concentrates on each unit (for example, component, class, or content object) as they are implemented in source code.

- Testing progresses to integration testing, where the focus is on design and the construction of the software architecture.

- Taking another turn outward on the spiral, validation testing is where requirements established as part of requirements modeling are validated against the software that has been constructed.

- In system testing, the software and other system elements are tested as a whole.

Software Testing Steps

When is Testing Done?

Criteria for Done

- You’re never done testing; the burden simply shifts from the software engineer to the end user. (Wrong).

- You’re done testing when you run out of time or you run out of money. (Wrong).

- The statistical quality assurance approach suggests executing tests derived from a statistical sample of all possible program executions by all targeted users.

- By collecting metrics during software testing and making use of existing statistical models, it is possible to develop meaningful guidelines for answering the question: “When are we done testing?”

Test Planning

- Specify product requirements in a quantifiable manner long before testing commences.

- State testing objectives explicitly.

- Understand the users of the software and develop a profile for each user category.

- Develop a testing plan that emphasizes “rapid cycle testing.”

- Build “robust” software that is designed to test itself.

- Use effective technical reviews as a filter prior to testing.

- Conduct technical reviews to assess the test strategy and test cases themselves.

- Develop a continuous improvement approach for the testing process.

Test Recordkeeping

Test cases can be recorded in a Google Docs spreadsheet. Each record:

- Briefly describes the test case.

- Contains a pointer to the requirement being tested.

- Contains expected output from the test case data or the criteria for success.

- Indicates whether the test passed or failed.

- Lists the dates the test case was run.

- Should have room for comments about why a test may have failed (aids in debugging).
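The fields listed above could be captured in a small record type. This is only a minimal sketch: the class name `CaseRecord`, its field names, and the requirement ID `REQ-12` are illustrative assumptions, not something prescribed in the class.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class CaseRecord:
    """One row of a test log (all names here are illustrative assumptions)."""
    description: str                  # brief description of the test case
    requirement_id: str               # pointer to the requirement being tested
    expected: str                     # expected output or criteria for success
    passed: Optional[bool] = None     # None until the test has been run
    run_dates: List[date] = field(default_factory=list)
    comments: str = ""                # why the test failed, to aid debugging

    def record_run(self, when: date, passed: bool, comments: str = "") -> None:
        """Log one execution of the test case."""
        self.run_dates.append(when)
        self.passed = passed
        if comments:
            self.comments = comments


record = CaseRecord(
    description="Withdraw more than the balance",
    requirement_id="REQ-12",          # hypothetical requirement ID
    expected="Withdrawal is rejected",
)
record.record_run(date(2024, 1, 15), passed=False,
                  comments="Balance went negative instead of rejecting")
```

Keeping the run dates as a list rather than a single value preserves the history when a test case is rerun after a fix.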

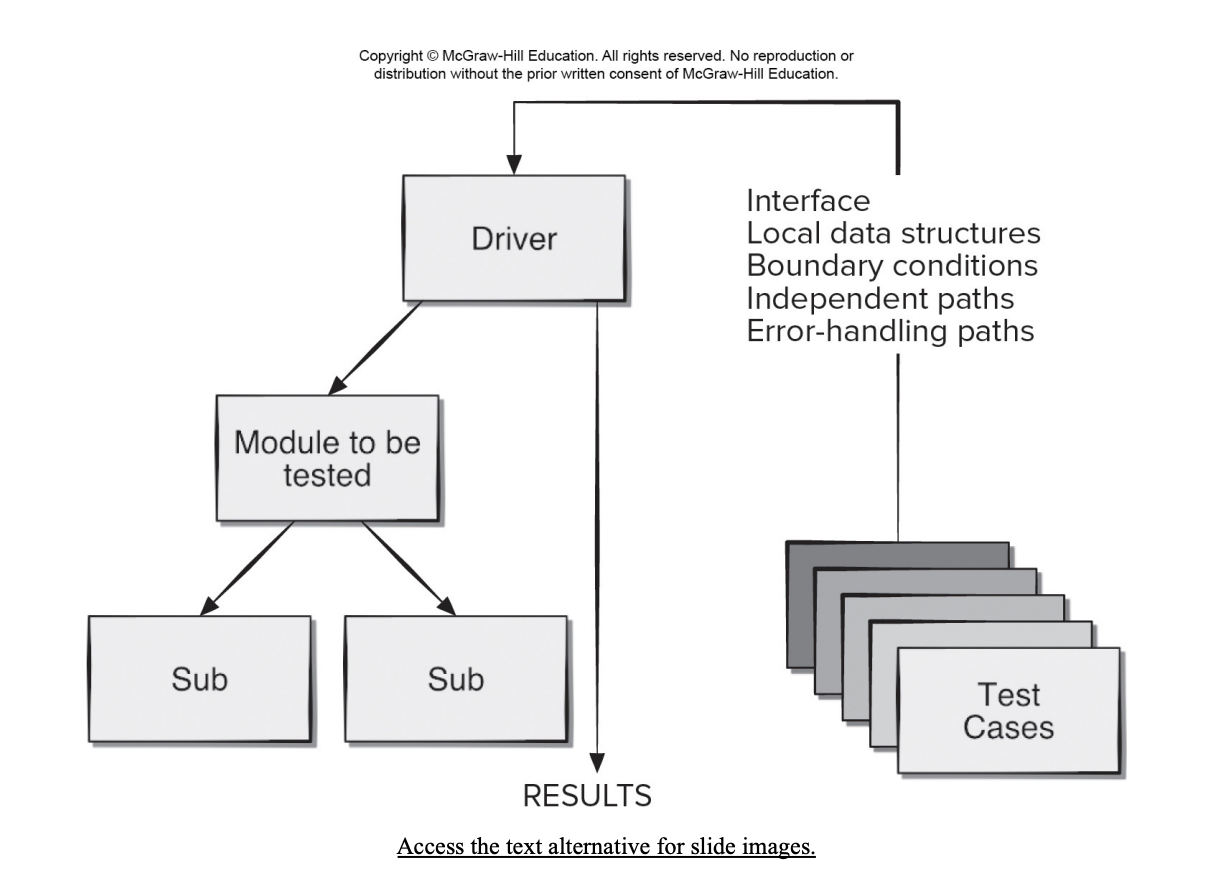

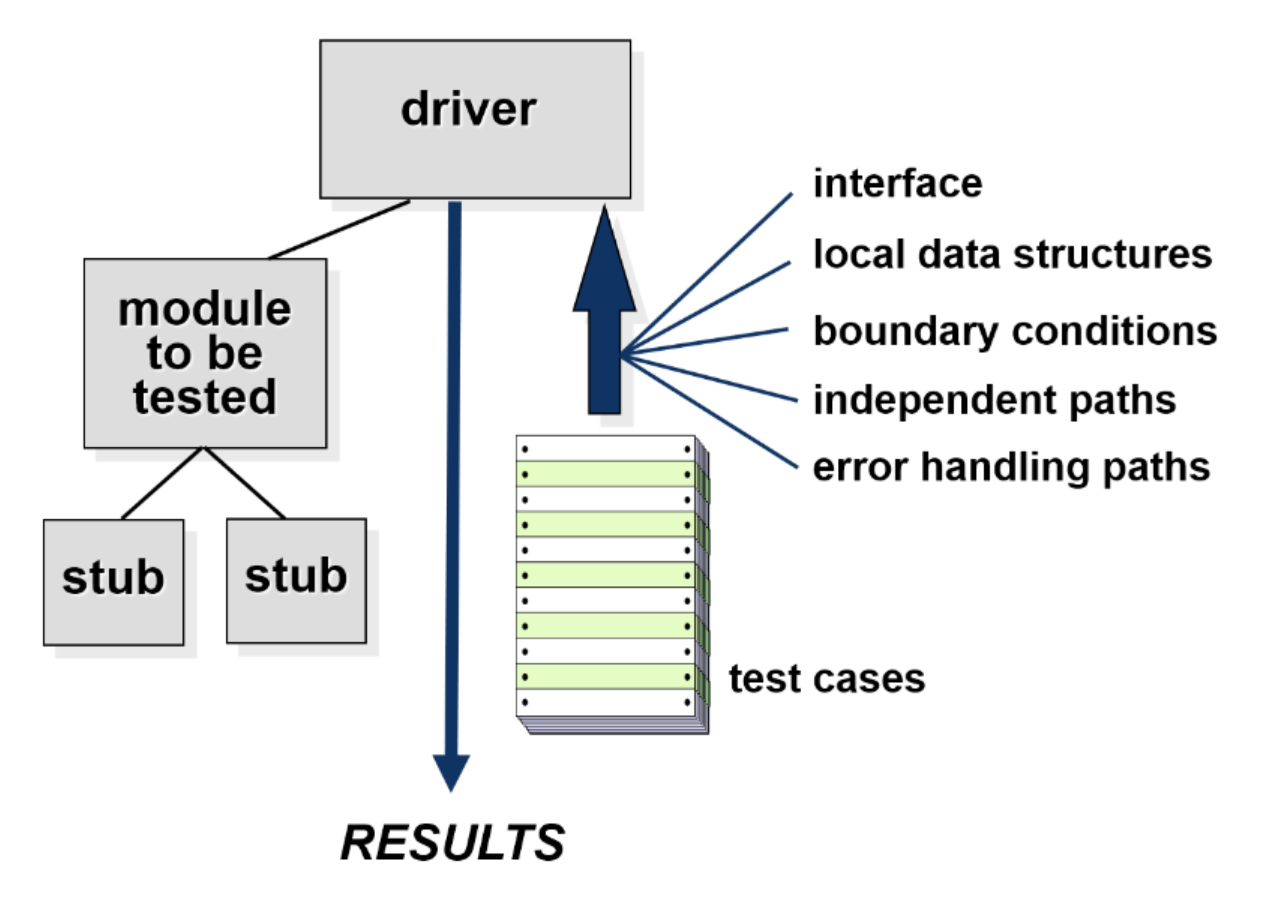

Role of Scaffolding

- Components are not stand-alone programs, so some type of scaffolding is required to create a testing framework.

- As part of this framework, driver and/or stub software must often be developed for each unit test.

- A driver is nothing more than a “main program” that accepts test-case data, passes such data to the component (to be tested), and prints relevant results.

- Stubs (dummy subprograms) serve to replace modules invoked by the component to be tested.

- A stub uses the module’s interface, may do minimal data manipulation, prints verification of entry, and returns control to the module undergoing testing.
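The driver and stub roles described above can be sketched in a few lines. Everything named here (`compute_total`, `tax_lookup_stub`, the prices in cents, and the 10 percent rate) is a hypothetical example, not code from the class.

```python
def compute_total(order_items, tax_lookup):
    """Component under test: sums item prices (in cents) and adds tax.

    `tax_lookup` is the subordinate module that the component invokes.
    """
    subtotal = sum(price for _, price in order_items)
    rate_percent = tax_lookup("default")
    return subtotal + subtotal * rate_percent // 100


def tax_lookup_stub(region):
    """Stub: same interface as the real module, minimal behavior."""
    print(f"stub entered: tax_lookup({region!r})")   # verification of entry
    return 10                                         # canned tax rate in percent


def driver():
    """Driver: passes test-case data to the component and prints results."""
    test_cases = [
        ([("pen", 200), ("pad", 300)], 550),
        ([], 0),
    ]
    results = []
    for items, expected in test_cases:
        actual = compute_total(items, tax_lookup_stub)
        results.append(actual == expected)
        print(f"items={items} expected={expected} actual={actual}")
    return results


if __name__ == "__main__":
    driver()
```

Passing the subordinate module in as a parameter is one design choice that makes it easy to substitute the stub; a real project might swap it in some other way.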

Unit Test Environment

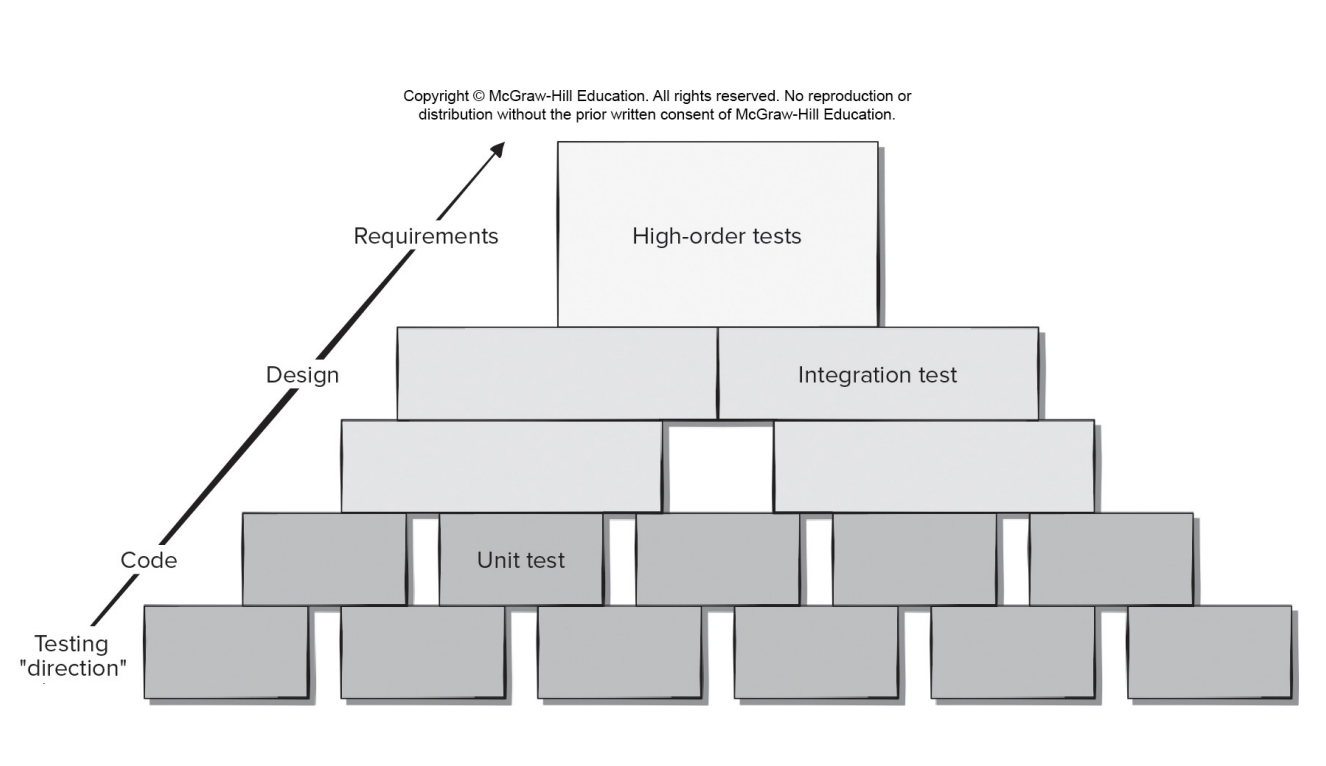

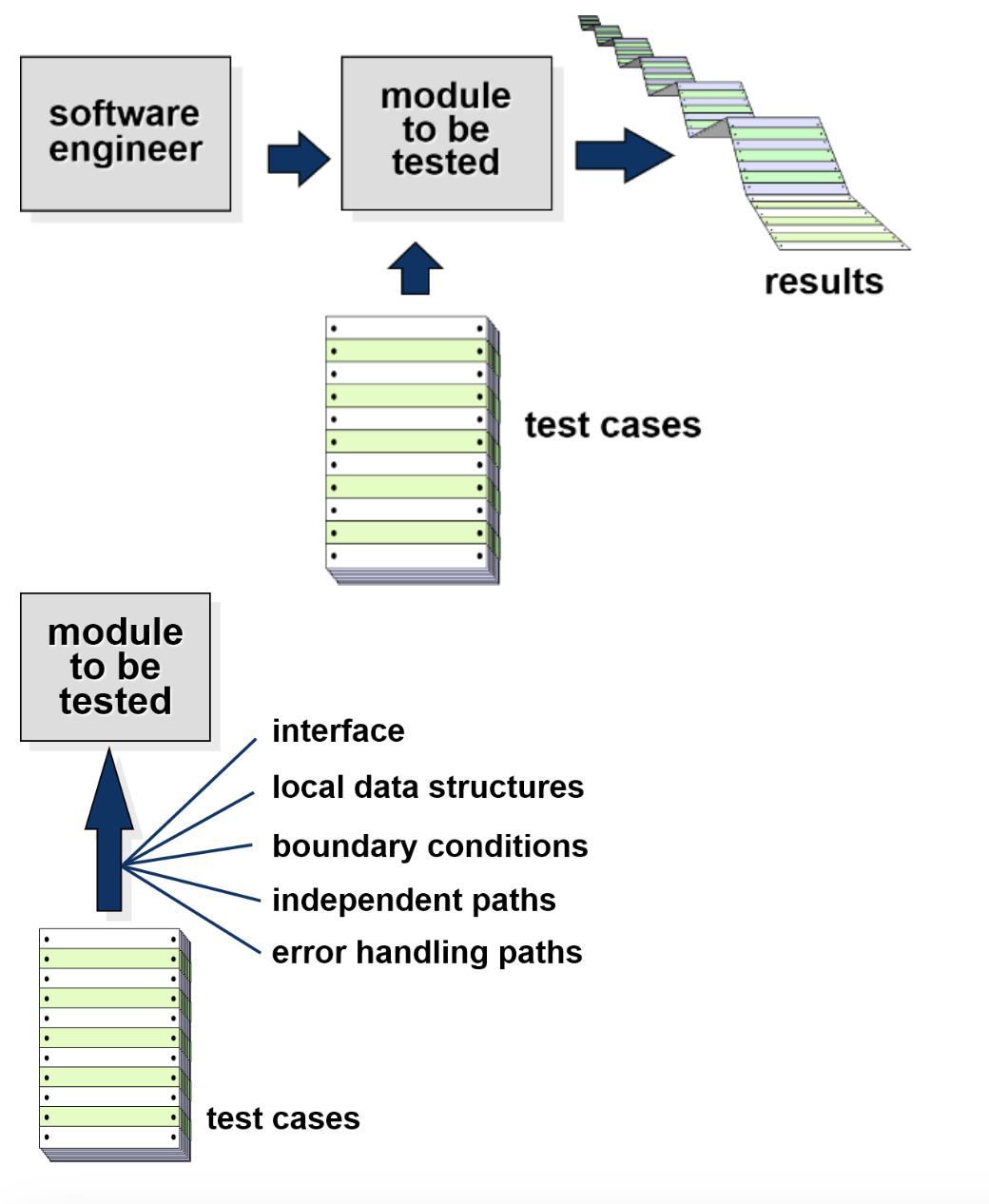

Unit Testing

Initially, tests focus on each component individually, ensuring that it functions properly as a unit.

Hence, the name unit testing. Unit testing makes heavy use of testing techniques that exercise specific paths in a component’s control structure to ensure complete coverage and maximum error detection.

Unit-Test Procedures

Because a component is not a stand-alone program, driver and/or stub software must often be developed for each unit test. In most applications a driver is nothing more than a “main program” that accepts test-case data, passes such data to the component (to be tested), and prints relevant results. Stubs serve to replace modules that are subordinate to (invoked by) the component to be tested. A stub or “dummy subprogram” uses the subordinate module’s interface, may do minimal data manipulation, prints verification of entry, and returns control to the module undergoing testing.

General Testing Criteria

The following criteria and corresponding tests are applied for all test phases:

- Interface integrity. Internal and external interfaces are tested as each module (or cluster) is incorporated into the structure.

- Functional validity. Tests designed to uncover functional errors are conducted.

- Information content. Tests designed to uncover errors associated with local or global data structures are conducted.

- Performance. Tests designed to verify performance bounds established during software design are conducted.

Cost Effective Testing

- Exhaustive testing requires every possible combination and ordering of input values be processed by the test component.

- The return on exhaustive testing is often not worth the effort, since testing alone cannot be used to prove a component is correctly implemented.

- Testers should work smarter and focus their unit-testing resources on modules that are crucial to the success of the project or that are suspected to be error-prone.

MobileApp Testing

The strategy for testing mobile applications adopts the basic principles for all software testing. However, the unique nature of MobileApps demands the consideration of a number of specialized issues:

- User-experience testing. Users are involved early in the development process to ensure the app meets stakeholder usability and accessibility expectations on all supported devices.

- Device compatibility testing. Testers verify the app works correctly on multiple devices and software platforms.

- Performance testing. Testers check non-functional requirements unique to mobile devices (e.g. download times, processor speed, storage capacity, power availability).

- Connectivity testing. Testers check ability of app to connect reliably to any needed networks or web services.

- Security testing. Testers ensure app does not compromise the privacy or security requirements of its users.

- Testing-in-the-wild. The app is tested on actual user devices in a variety of realistic user environments.

- Certification testing. Testers ensure the app meets the distribution standards of the distributors.

High Order Testing

- Validation testing: Focus is on software requirements.

- System testing: Focus is on system integration.

- Alpha/Beta testing: Focus is on customer usage. The alpha test is conducted at the developer’s site by a representative group of end users. The beta test is conducted at one or more end-user sites. Unlike alpha testing, the developer generally is not present.

- Recovery testing: Forces the software to fail in a variety of ways and verifies that recovery is properly performed.

- Security testing: Verifies that protection mechanisms built into a system will, in fact, protect it from illegal penetration.

- Stress testing: Executes a system in a manner that demands resources in abnormal quantity, frequency, or volume.

- Performance testing: Tests the run-time performance of software within the context of an integrated system.

Test Case Design

Design unit test cases before you develop code for a component to ensure that you write code that will pass the tests.

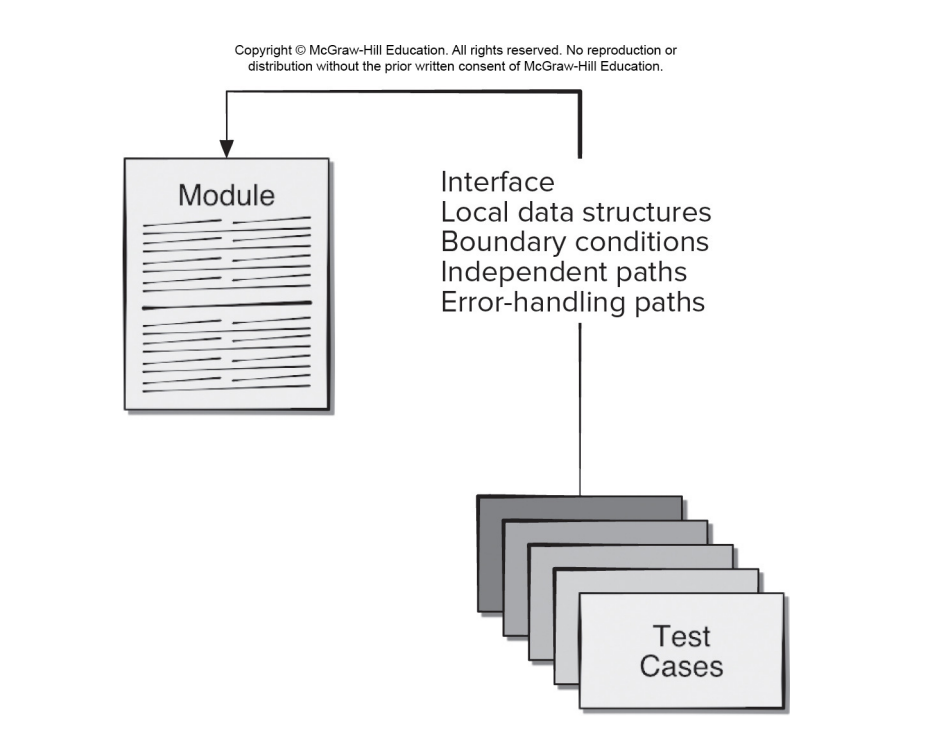

Test cases are designed to cover the following areas:

- The module interface is tested to ensure that information properly flows into and out of the program unit.

- Local data structures are examined to ensure that stored data maintains its integrity during execution.

- Independent paths through control structures are exercised to ensure all statements are executed at least once.

- Boundary conditions are tested to ensure the module operates properly at boundaries established to limit or restrict processing.

- All error-handling paths are tested.

Module Tests

Error Handling

- A good design anticipates error conditions and establishes error-handling paths, which must be tested.

- Among the potential errors that should be tested when error handling is evaluated are:

- Error description is unintelligible.

- Error noted does not correspond to error encountered.

- Error condition causes system intervention prior to error handling.

- Exception-condition processing is incorrect.

- Error description does not provide enough information to assist in the location of the cause of the error.

Traceability

- To ensure that the testing process is auditable, each test case needs to be traceable back to specific functional or nonfunctional requirements or anti-requirements.

- Often nonfunctional requirements need to be traceable to specific business or architectural requirements.

- Many test process failures can be traced to missing traceability paths, inconsistent test data, or incomplete test coverage.

- Regression testing requires retesting selected components that may be affected by changes made to other collaborating software components.
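A traceability check like the one described above can be automated in a simple way. The sketch below is only illustrative: the requirement IDs, test-case IDs, and the mapping between them are made up for the example.

```python
# Hypothetical requirement IDs and a hypothetical test-to-requirement mapping.
REQUIREMENTS = {"REQ-1", "REQ-2", "REQ-3"}
TEST_TRACE = {
    "TC-01": {"REQ-1"},
    "TC-02": {"REQ-1", "REQ-2"},
    "TC-03": set(),               # a test case with no traceability path
}


def untraceable_tests(trace):
    """Test cases that do not point back to any requirement."""
    return sorted(test for test, reqs in trace.items() if not reqs)


def uncovered_requirements(requirements, trace):
    """Requirements that no test case traces to."""
    covered = set().union(*trace.values())
    return sorted(requirements - covered)


missing_paths = untraceable_tests(TEST_TRACE)
coverage_gaps = uncovered_requirements(REQUIREMENTS, TEST_TRACE)
```

With this made-up data, `TC-03` has no traceability path and `REQ-3` has no test, which are two of the failure causes named in the list above.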

White-box Testing

White-box testing, sometimes called glass-box or structural testing, is a test-case design philosophy that uses the control structure described as part of component-level design to derive test cases.

Using white-box testing methods, you can create test cases that:

- Guarantee all independent paths in a module have been exercised at least once.

- Exercise all logical decisions on their true and false sides.

- Execute all loops at their boundaries and within their operation bounds.

- Exercise internal data structures to ensure their validity.
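As a minimal sketch of exercising decisions on their true and false sides, assume a hypothetical `shipping_fee` component (not from the class notes) with three decisions. The three test inputs are chosen so that every decision evaluates to both true and false at least once.

```python
def shipping_fee(weight_kg, express):
    """Hypothetical component under test with three logical decisions."""
    if weight_kg <= 0:                    # decision 1
        raise ValueError("weight must be positive")
    if weight_kg <= 2:                    # decision 2
        fee = 5
    else:
        fee = 9
    if express:                           # decision 3
        fee += 4
    return fee


def run_decision_tests():
    """Exercise each decision on both sides and return the outcomes."""
    outcomes = []
    # Decision 1 true: an invalid weight takes the error-handling path.
    try:
        shipping_fee(-1, False)
        outcomes.append("no error")
    except ValueError:
        outcomes.append("ValueError")
    # Decision 1 false, decision 2 true, decision 3 false.
    outcomes.append(shipping_fee(1, False))
    # Decision 1 false, decision 2 false, decision 3 true.
    outcomes.append(shipping_fee(3, True))
    return outcomes
```

Covering every decision both ways does not by itself cover every path: the combination of a light parcel with express shipping is never run here.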

White-box Testing - 1. Basis Path Testing

The basis path method enables the test-case designer to derive a logical complexity measure of a procedural design and use this measure as a guide for defining a basis set of execution paths. Test cases derived to exercise the basis set are guaranteed to execute every statement in the program at least once during testing.

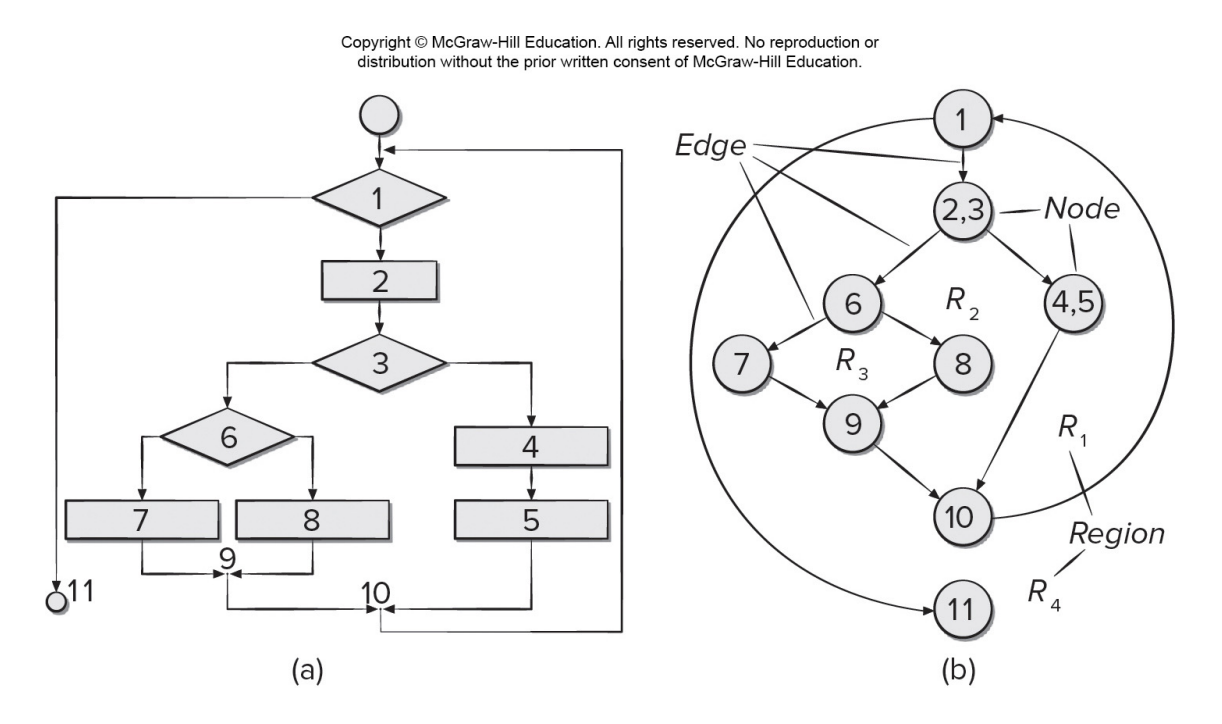

Flowchart (a) and Flow Graph (b)

White-box Testing - 2. Control Structure Testing

The basis path testing technique is one of several techniques for control structure testing. Other techniques are needed to broaden testing coverage to supplement basis path testing and improve the quality of white-box testing.

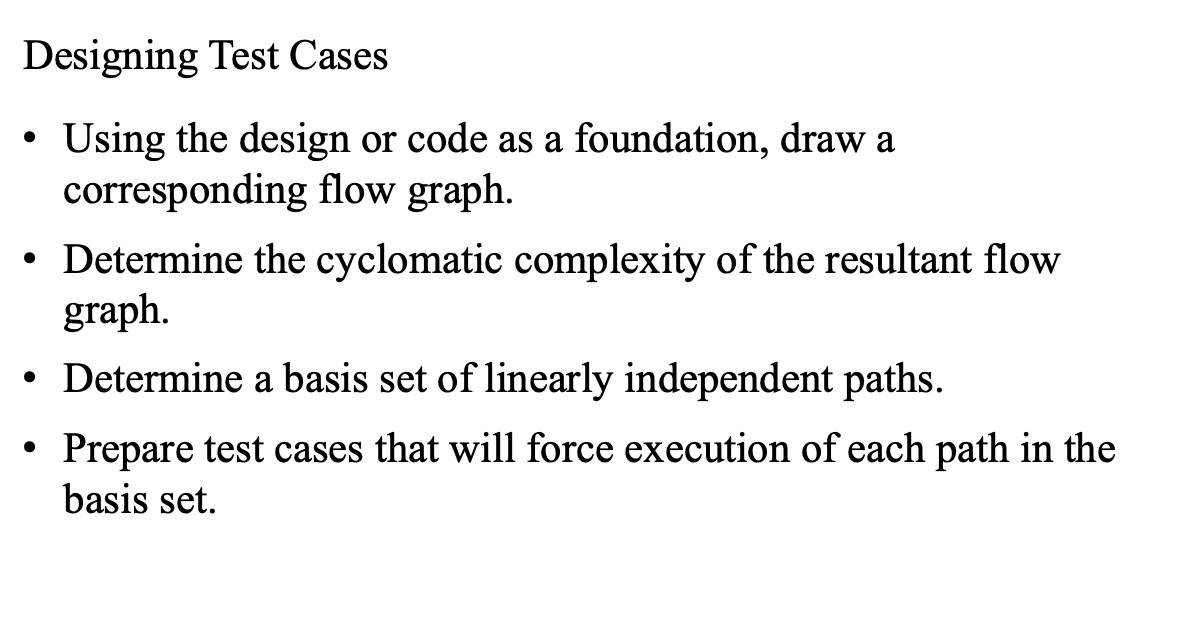

- Condition testing is a test-case design method that exercises the logical conditions contained in a program module.

- Data flow testing selects test paths of a program according to the locations of definitions and uses of variables in the program.

- Loop testing is a white-box testing technique that focuses exclusively on the validity of loop constructs, which include simple and nested loops.

White-box Testing - 3. Loop Testing

Classes of Loops

| Test cases for simple loops | Test cases for nested loops |
|---|---|
| 1. Skip the loop entirely. | 1. Start at the innermost loop. Set all other loops to minimum values. |
| 2. Only one pass through the loop. | 2. Conduct simple loop tests for the innermost loop while holding the outer loops at their minimum iteration parameter (for example, loop counter) values. |
| 3. Two passes through the loop. | 3. Add other tests for out-of-range or excluded values. |
| 4. m passes through the loop where m < n. | 4. Work outward, conducting tests for the next loop, but keeping all other outer loops at minimum values and other nested loops to “typical” values. |
| 5. n − 1, n, n + 1 passes through the loop. | 5. Continue until all loops have been tested. |

Here n is the maximum number of allowable passes through the loop.
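The simple-loop column can be turned into a list of pass counts to try. This is a sketch under stated assumptions: `simple_loop_pass_counts` and `sum_up_to` are hypothetical names, and choosing m as n // 2 when no value is given is an arbitrary "typical" value, not a rule from the class.

```python
def simple_loop_pass_counts(n, m=None):
    """Pass counts to try for a simple loop that allows at most n passes."""
    if n < 1:
        raise ValueError("n must be at least 1")
    if m is None:
        m = n // 2                 # assumed "typical" count with m < n
    if not m < n:
        raise ValueError("m must be less than n")
    counts = [0, 1, 2, m, n - 1, n, n + 1]
    return list(dict.fromkeys(counts))   # drop duplicates, keep order


def sum_up_to(values, limit):
    """Hypothetical component under test: sums at most `limit` leading values."""
    total = 0
    for index, value in enumerate(values):
        if index >= limit:
            break
        total += value
    return total


# Feed the component 0, 1, 2, m, n - 1, n, and n + 1 items with n = 10.
totals = [sum_up_to([1] * count, 10) for count in simple_loop_pass_counts(10)]
```

The last case asks for n + 1 passes, so the interesting check is that the component stops at n instead of running one pass too many.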

Black Box Testing

Black-box (functional) testing attempts to find errors in the following categories:

- Incorrect or missing functions.

- Interface errors.

- Errors in data structures or external database access.

- Behavior or performance errors.

- Initialization and termination errors.

Unlike white-box testing, which is performed early in the testing process, black-box testing tends to be applied during later stages of testing.

Black-box test cases are created to answer questions like:

- How is functional validity tested?

- How are system behavior and performance tested?

- What classes of input will make good test cases?

- Is the system particularly sensitive to certain input values?

- How are the boundaries of a data class isolated?

- What data rates and data volume can the system tolerate?

- What effect will specific combinations of data have on system operation?

Black Box – 1. Interface Testing

- Interface testing is used to check that a program component accepts information passed to it in the proper order and data types and returns information in proper order and data format.

- Components are not stand-alone programs, so testing interfaces requires the use of stubs and drivers.

- Stubs and drivers sometimes incorporate test cases to be passed to the component or accessed by the component.

- Debugging code may need to be inserted inside the component to check that data passed was received correctly.

Black Box – 2. Object-Oriented Testing (OOT)

To adequately test OO systems, three things must be done:

- The definition of testing must be broadened to include error discovery techniques applied to object-oriented analysis and design models.

- The strategy for unit and integration testing must change significantly.

- The design of test cases must account for the unique characteristics of OO software.

OOT - Class Testing

- Class testing for object-oriented (OO) software is the equivalent of unit testing for conventional software.

- Unlike unit testing of conventional software, which tends to focus on the algorithmic detail of a module and the data that flow across the module interface, class testing for OO software is driven by the operations encapsulated by the class and the state behavior of the class.

- Valid sequences of operations and their permutations are used to test class behaviors; equivalence partitioning can reduce the number of sequences needed.

OOT - Behavior Testing

- A state diagram can be used to help derive a sequence of tests that will exercise dynamic behavior of the class.

- The tests should achieve full coverage by using operation sequences that cause transitions through all allowable states.

- When class behavior results in a collaboration with several classes, multiple state diagrams can be used to track system behavioral flow.

- A state model can be traversed in a breadth-first manner: each test case exercises a single new transition, and when a new transition is to be tested, only previously tested transitions are used to reach it.
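The breadth-first traversal described above can be sketched as follows. The state model here is a hypothetical, simplified account (its state and operation names are assumptions), not a transcription of any diagram in these notes.

```python
from collections import deque

# Hypothetical state model: (state, operation) -> next state.
TRANSITIONS = {
    ("empty", "setup"): "set_up",
    ("set_up", "deposit"): "working",
    ("working", "deposit"): "working",
    ("working", "withdraw"): "working",
    ("working", "close"): "closed",
}


def breadth_first_sequences(start):
    """Return one operation sequence per transition, in breadth-first order.

    Each sequence reaches the transition's source state using only
    transitions covered by an earlier sequence, then exercises the new one.
    """
    path_to = {start: []}            # operation path that first reached a state
    queue = deque([start])
    sequences = []
    while queue:
        state = queue.popleft()
        for (source, operation), target in TRANSITIONS.items():
            if source != state:
                continue
            sequences.append(path_to[state] + [operation])
            if target not in path_to:
                path_to[target] = path_to[state] + [operation]
                queue.append(target)
    return sequences


test_sequences = breadth_first_sequences("empty")
```

Each returned sequence would then be replayed against the real class, checking the resulting state after the final operation.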

Black Box – 3. Boundary Value Analysis (BVA)

- Boundary value analysis leads to a selection of test cases that exercise bounding values.

- Guidelines for BVA:

  1. If an input condition specifies a range bounded by values a and b, test cases should be designed with values a and b and just above and just below a and b.

  2. If an input condition specifies a number of values, test cases should be developed that exercise the min and max numbers as well as values just above and below min and max.

  3. Apply guidelines 1 and 2 to output conditions.

  4. If internal program data structures have prescribed boundaries (for example, an array with a max index of 100), be certain to design a test case to exercise the data structure at its boundary.
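The first guideline (a range bounded by a and b) can be sketched as a small helper. The names `boundary_values` and `accepts_quantity`, the step of 1, and the range 1 to 100 are assumptions chosen for illustration.

```python
def boundary_values(a, b, step=1):
    """Return test inputs at, just below, and just above each bound of [a, b]."""
    if a > b:
        raise ValueError("a must not exceed b")
    candidates = {a - step, a, a + step, b - step, b, b + step}
    return sorted(candidates)


def accepts_quantity(quantity):
    """Hypothetical component under test: valid order quantities are 1..100."""
    return 1 <= quantity <= 100


# Pair each boundary value with what the component reports for it.
results = {q: accepts_quantity(q) for q in boundary_values(1, 100)}
```

For this made-up range, the values just outside the bounds (0 and 101) should be rejected and the four values on or just inside the bounds should be accepted.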

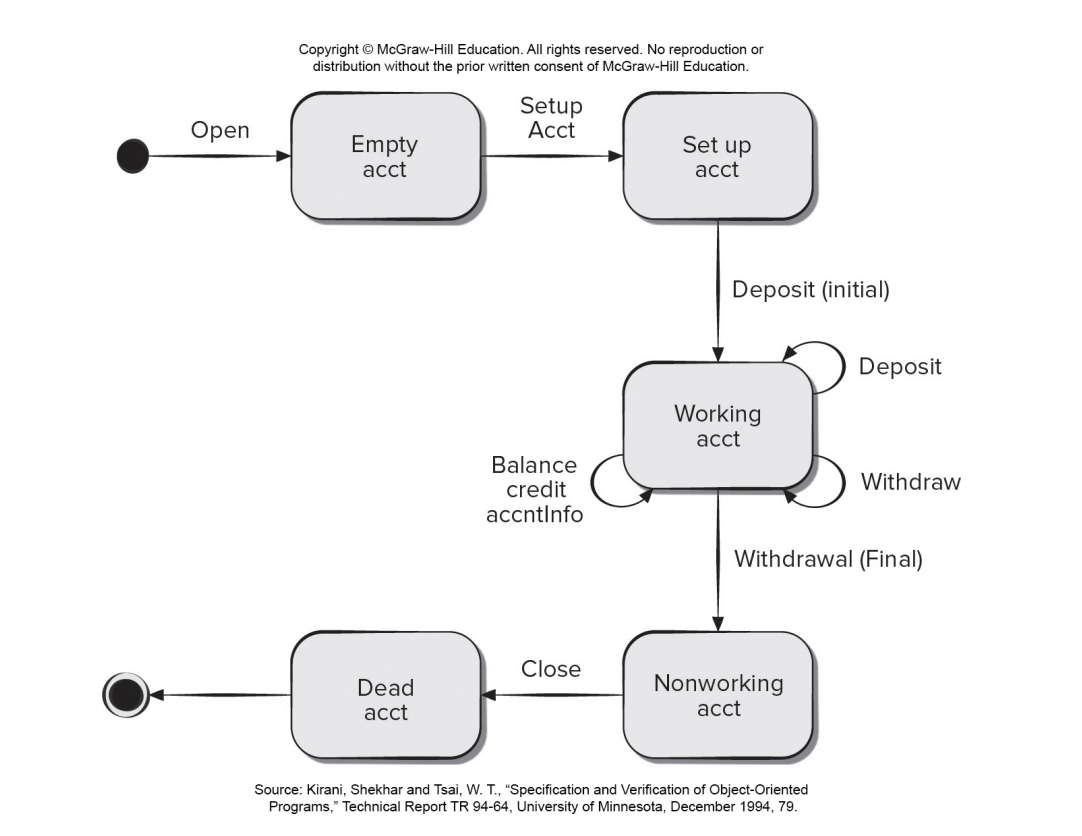

State Diagram for Account Class

White-box and black-box testing statements

- Black-box testing techniques enable you to derive sets of input conditions that will fully exercise all functional requirements for a program.

- White-box testing is a design philosophy that uses the control structure to derive test cases.

- Basis path testing is a white-box testing technique that enables the test-case designer to define a basis set of execution paths.

- Equivalence partitioning is a black-box testing method that divides the input domain of a program into classes of data.

- Data flow testing selects test paths of a program according to the locations of definitions and uses of variables in a program.

- Condition testing is a test-case design method that exercises the logical conditions in a program module.

- Loop testing is a testing technique that focuses exclusively on the validity of loop constructs.

- Data flow testing, condition testing, and loop testing are all techniques for control structure testing.

- Boundary value analysis is a test-case design technique that leads to the selection of test cases at the ‘edges’ of a class.

- Interface testing checks that the program component accepts information in the proper order and data types and returns information in the proper order and data format.

- Equivalence partitioning, boundary value analysis, and interface testing are all techniques used in black-box testing, also called functional testing.

Final Thoughts

- Think – before you act to correct.

- Use tools to gain additional insight.

- If you’re at an impasse, get help from someone else.

- Once you correct the bug, use regression testing to uncover any side effects.

Text Book

Chapter 19. Software Testing - Component Level

This content discusses software component testing and the importance of having a flexible but well-planned testing strategy. The responsibility for component testing lies with individual software engineers, but the strategy is developed by the project manager, software engineers, and testing specialists. Testing should be conducted systematically to uncover errors in data and processing logic. The testing work product is a test specification, which outlines the overall testing strategy and specific testing steps. An effective test plan and procedure will lead to the discovery of errors at each stage of the construction process.

19.1 A strategic approach to software testing

This content discusses the importance of having a defined template for software testing, which includes conducting technical reviews, beginning testing at the component level and working outward, using different testing techniques for different software engineering approaches, involving both developers and independent test groups in testing, and accommodating debugging within the testing strategy. The testing strategy should incorporate a set of tactics for low-level and high-level tests, provide guidance for practitioners and milestones for managers, and enable measurable progress and early problem detection.

19.1.1 Verification and Validation

Verification and validation (V&V) is a broader topic that includes software testing and other software quality assurance activities such as technical reviews, audits, monitoring, simulation, feasibility study, documentation review, algorithm analysis, and more. Verification ensures that the software correctly implements a specific function, while validation ensures that the software built is traceable to customer requirements. Testing plays an important role in V&V, but it cannot be viewed as a safety net for quality. Quality should be incorporated into software throughout the software engineering process, and testing confirms quality that has been built into the software.

19.1.2 Organizing for Software Testing

The process of software testing is complicated by the inherent conflict of interest that occurs when developers test their own software. Developers have a vested interest in demonstrating that the software is error-free, meets customer requirements, and is completed on schedule and within budget. However, thorough testing requires finding errors, which is not in the developers’ interest. Independent testing removes this conflict of interest and helps ensure thorough testing. Developers are still responsible for testing individual components and conducting integration testing. The independent test group (ITG) works closely with developers throughout the software development project, planning and specifying test procedures, and reporting to the software quality assurance organization.

19.1.3 The Big Picture

The software process is represented as a spiral that begins with system engineering, followed by software requirements analysis, design, and coding. As you move inward along the spiral, the level of abstraction decreases with each turn. The spiral illustrates the iterative nature of the software development process.

The process of software testing can be viewed as a spiral that starts with unit testing and progresses outward to integration testing, validation testing, and system testing. Testing in software engineering is a sequential series of steps, with unit testing focusing on individual components, integration testing addressing the issues of verification and program construction, and validation testing providing final assurance that the software meets all functional, behavioral, and performance requirements. Testing techniques range from specific path exercises to input and output focus to ensure coverage and maximum error detection.

After the software has been validated through integration and validation testing, it must be combined with other system elements (such as hardware, people, and databases) to form a complete computer system. System testing verifies that all elements of the system work together properly to achieve the desired system function and performance. This step falls within the broader context of computer system engineering.

19.1.4 Criteria for “Done”

The question of when software testing is complete is a classic one with no definitive answer. One response is that testing is never truly finished, as users will continue to test the software through its use. Another response is that testing ends when you run out of time or money. However, more rigorous criteria are needed, and statistical quality assurance techniques can provide guidance. By collecting metrics during testing and using statistical models, it is possible to determine when sufficient testing has been conducted.

19.2 Planning and Recordkeeping

Software testing strategies range from waiting until the system is fully constructed and testing the overall system to conducting tests on a daily basis whenever any part of the system is constructed. The recommended strategy is incremental testing, which begins with testing individual program units, moves to tests designed to facilitate integration of the units, and culminates with tests that exercise the constructed system. Unit testing focuses on the smallest unit of software design, testing important control paths to uncover errors within the boundary of the module. A successful testing strategy requires specifying product requirements in a quantifiable manner, stating testing objectives explicitly, understanding the users of the software, developing a testing plan emphasizing “rapid cycle testing,” building “robust” software that is designed to test itself, using effective technical reviews as a filter prior to testing, conducting technical reviews to assess the test strategy and test cases themselves, and developing a continuous improvement approach for the testing process. Agile software testing also requires a test plan established before the first sprint meeting, test cases and directions reviewed by stakeholders as the code is developed, and testing results shared with all team members. Test recordkeeping can be done in online documents, such as a Google Docs spreadsheet, that contain a brief description of the test case, a pointer to the requirement being tested, expected output, indication of whether the test was passed or failed, dates the test case was run, and comments about why a test may have failed to aid in debugging.

19.2.1 Role of Scaffolding

Component testing is a step in software testing that focuses on verifying the smallest unit of software design, which is the component or module. Unit tests can be designed before or after the source code is generated. To establish test cases, design information is reviewed and expected results are identified. A driver and/or stub software is required to create a testing framework for each unit test. The driver is a “main program” that accepts test-case data, passes it to the component being tested, and prints relevant results. Stubs replace subordinate modules invoked by the component being tested, use the subordinate module’s interface, may do minimal data manipulation, print verification of entry, and return control to the module being tested.

Drivers and stubs are used in software testing as testing overhead. They are software components that must be coded but are not included in the final software product. If drivers and stubs are kept simple, the actual overhead is low. However, in cases where components cannot be adequately tested with simple scaffolding software, complete testing is postponed until the integration test step, where drivers or stubs are also used.

19.2.2 Cost-Effective Testing

Exhaustive testing, which requires testing every possible combination of input values and test-case orderings, is often not worth the effort and cannot prove a component is correctly implemented. In cases where resources are limited, testers should focus on selecting crucial modules and those that are suspected to be error-prone. Techniques for minimizing the number of test cases required for effective testing are discussed in Sections 19.4 through 19.6.

Exhaustive Testing

The content describes a 100-line C program that has nested loops and if-then-else constructs, resulting in 10^14 possible paths. It highlights the impracticality of exhaustive testing using a hypothetical magic test processor that would take 3170 years to test the program 24 hours a day, 365 days a year. The conclusion is that exhaustive testing is impossible for large software systems.
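The 3170-year figure can be checked with a little arithmetic. The sketch below assumes the magic test processor needs one millisecond per path; that rate is an assumption, since the summary above does not state it.

```python
POSSIBLE_PATHS = 10**14          # from the example above
MS_PER_TEST = 1                  # assumed: one millisecond per path tested

# Testing runs 24 hours a day, 365 days a year.
MS_PER_YEAR = 365 * 24 * 60 * 60 * 1000

total_ms = POSSIBLE_PATHS * MS_PER_TEST
full_years = total_ms // MS_PER_YEAR

print(f"Exhaustive testing would take about {full_years} years")
```

Under that assumption the integer division gives 3170 full years, which matches the figure quoted above.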

19.3 Test-Case Design

This content emphasizes the importance of unit testing and designing test cases before developing code for a component. Unit testing involves testing the smallest unit of software design, the software component or module, and focuses on important control paths to uncover errors within the boundary of the module. The article recommends an incremental testing strategy that begins with testing individual program units and culminates with tests that exercise the constructed system as it evolves. It also outlines the principles necessary for a software testing strategy to succeed, such as specifying product requirements in a quantifiable manner, understanding the users of the software, conducting technical reviews, and developing a continuous improvement approach. The article further suggests that it is a good idea to design unit test cases before developing code for a component to ensure that the code passes the tests. Finally, the article describes the different aspects that need to be tested during unit testing, such as the module interface, local data structures, independent paths through the control structure, boundary conditions, and error-handling paths.

This content discusses the importance of unit testing in software development and highlights key considerations in designing effective unit tests. It emphasizes the need to test data flow, local data structures, execution paths, and boundary conditions to uncover errors. The importance of testing error-handling paths is also emphasized, including the need to test every error-handling path and anticipate potential errors such as unintelligible error descriptions or incorrect exception-condition processing.

19.3.1 Requirements and Use Cases

The systematic creation of test cases based on use cases and analysis models can ensure good test coverage for functional requirements, while customer acceptance statements in user stories can provide the basis for writing test cases for nonfunctional requirements. Testing nonfunctional requirements may require specialized testing techniques. The primary purpose of testing is to help developers discover unknown defects, so it’s important to write test cases that exercise the error-handling capabilities of a component and test that it doesn’t do things it’s not supposed to do, stated formally as anti-requirements. These negative test cases should be included to ensure the component behaves according to the customer’s expectations.

19.3.2 Traceability

To ensure an auditable testing process, each test case must be traceable back to specific functional or nonfunctional requirements or anti-requirements. Agile developers may resist traceability, but many test process failures can be traced to missing traceability paths, inconsistent test data, or incomplete test coverage. Regression testing requires retesting selected components that may be affected by changes made to other software components. Making sure that test cases are traceable to requirements is an important step in component testing.
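One lightweight way to check traceability is to keep a mapping from each test case to the requirement IDs it covers and look for gaps in both directions. The sketch below assumes hypothetical requirement IDs (`FR-*` functional, `NFR-*` nonfunctional, `AR-*` anti-requirement) and hypothetical test names; a real project would load these from its own requirements and test tooling.

```python
# Hypothetical requirement IDs and test-to-requirement mapping.
REQUIREMENTS = {"FR-1", "FR-2", "NFR-1", "AR-1"}

TRACE = {
    "test_deposit": {"FR-1"},
    "test_withdraw": {"FR-2"},
    "test_overdraft_rejected": {"AR-1"},
    "test_misc": set(),          # not linked to any requirement
}


def untraced_tests(trace):
    """Tests that cannot be traced back to any requirement."""
    return sorted(name for name, reqs in trace.items() if not reqs)


def uncovered_requirements(requirements, trace):
    """Requirements that no test case traces to."""
    covered = set().union(*trace.values())
    return sorted(requirements - covered)


print(untraced_tests(TRACE))                        # ['test_misc']
print(uncovered_requirements(REQUIREMENTS, TRACE))  # ['NFR-1']
```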

19.4 White-Box Testing

White-box testing is a test-case design philosophy that uses the control structure described as part of component-level design to derive test cases. White-box testing methods can derive test cases that guarantee all independent paths within a module have been exercised at least once, exercise all logical decisions on their true and false sides, execute all loops at their boundaries and within their operational bounds, and exercise internal data structures to ensure their validity.

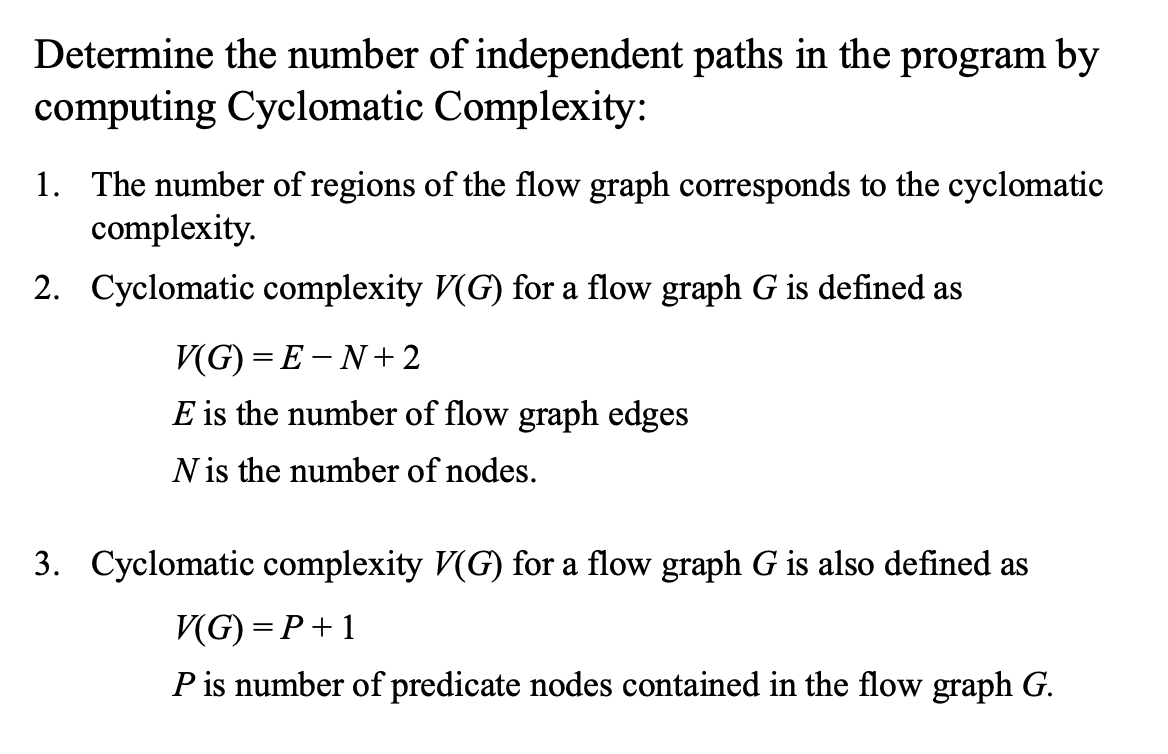

19.4.1 Basis Path Testing

Basis path testing is a white-box testing technique used to derive a logical complexity measure of a procedural design and define a basis set of execution paths for deriving test cases that exercise every statement in the program at least once during testing. This method requires the use of a flow graph to represent the control flow of the program, which allows for easier tracing of program paths. The flow graph consists of nodes representing procedural statements and edges representing flow of control. The regions bounded by edges and nodes are counted to derive a measure of the logical complexity of the program.

An independent path is any path through the program that introduces at least one new set of processing statements or a new condition. The number of independent paths can be determined by calculating the cyclomatic complexity, a software metric that provides a quantitative measure of the logical complexity of a program. Cyclomatic complexity V(G) can be computed in three ways: as the number of regions of the flow graph, as V(G) = E - N + 2, or as V(G) = P + 1, where E is the number of flow graph edges, N is the number of flow graph nodes, and P is the number of predicate nodes contained in the flow graph. The value of V(G) provides an upper bound on the number of independent paths that form the basis set and, by implication, an upper bound on the number of tests that must be designed and executed to guarantee coverage of all program statements. The basis set is not unique; a number of different basis sets can be derived for a given procedural design. In the example given, the cyclomatic complexity of the flow graph is 4, so at most four test cases are needed to exercise each independent logic path.
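The two formulas can be checked against each other on a small flow graph. The sketch below uses a hypothetical graph (not necessarily the one in the figure) with 11 edges, 9 nodes, and 3 predicate nodes. It assumes a connected graph with a single entry and exit in which every predicate node is a binary decision, which is the condition under which P + 1 agrees with E - N + 2.

```python
# Hypothetical flow graph as (source, destination) edges.
# Node 1 is the entry and also a loop test; node 9 is the exit.
EDGES = [
    (1, 2), (1, 9),          # predicate node 1
    (2, 3), (2, 4),          # predicate node 2
    (3, 5),
    (4, 6), (4, 7),          # predicate node 4
    (6, 8), (7, 8),
    (8, 5),
    (5, 1),                  # back edge closing the loop
]


def complexity_from_edges(edges):
    """V(G) = E - N + 2 for a connected flow graph."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2


def complexity_from_predicates(edges):
    """V(G) = P + 1, counting nodes with more than one outgoing edge."""
    out_degree = {}
    for src, _ in edges:
        out_degree[src] = out_degree.get(src, 0) + 1
    predicates = sum(1 for degree in out_degree.values() if degree > 1)
    return predicates + 1


print(complexity_from_edges(EDGES))       # 4
print(complexity_from_predicates(EDGES))  # 4
```

With V(G) = 4, at most four basis paths (and therefore four test cases) are needed for this graph.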

19.4.2 Control Structure Testing

Basis path testing is effective but not sufficient in itself, so control structure testing techniques are used to complement it. These techniques include condition testing, data flow testing, and loop testing. Condition testing exercises logical conditions in a program module, while data flow testing selects test paths based on variable definitions and uses. Loop testing focuses on the validity of loop constructs and can be applied to simple and nested loops.

For a simple loop where n is the maximum number of allowable passes, five tests can be applied: skip the loop entirely, make only one pass, make two passes, make m passes where m < n, and make n-1, n, and n+1 passes. For nested loops, the number of possible tests grows as the level of nesting increases. To reduce the number of tests, start at the innermost loop, conduct simple loop tests for it while holding the outer loops at their minimum iteration parameter values, and work outward until all loops have been tested.
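The simple-loop guideline translates directly into a list of pass counts to try. This is a rough sketch under stated assumptions: `simple_loop_pass_counts` and the component `sum_first` are hypothetical names, and m defaults to n // 2 only because some value with 0 < m < n has to be picked.

```python
def simple_loop_pass_counts(n, m=None):
    """Pass counts for simple-loop testing; n is the maximum allowable passes."""
    if n < 2:
        raise ValueError("n must be at least 2")
    if m is None:
        m = n // 2
    if not 0 < m < n:
        raise ValueError("m must satisfy 0 < m < n")
    counts = []
    for count in [0, 1, 2, m, n - 1, n, n + 1]:
        if count not in counts:      # drop duplicates, keep order
            counts.append(count)
    return counts


def sum_first(values, max_items):
    """Hypothetical component: sums at most max_items leading values."""
    total = 0
    passes = 0
    for value in values:
        if passes == max_items:
            break
        total += value
        passes += 1
    return total, passes


print(simple_loop_pass_counts(10))   # [0, 1, 2, 5, 9, 10, 11]

# Drive the loop with each pass count; the n+1 case must stop at n passes.
for count in simple_loop_pass_counts(10):
    total, passes = sum_first([1] * count, max_items=10)
    assert passes == min(count, 10)
```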

19.5 Black-Box Testing

Black-box testing is a complementary approach to white-box testing that focuses on the functional requirements of software. It attempts to find errors in categories such as incorrect or missing functions, interface errors, and initialization and termination errors. Black-box testing is typically applied during later stages of testing and is designed to answer questions about functional validity, system behavior and performance, input values, data class boundaries, data rates and volume, and the effects of specific data combinations. The goal is to derive a set of test cases that reduce the number of additional test cases needed for reasonable testing and provide information about classes of errors rather than just specific test cases.

19.5.1 Interface Testing

Interface testing ensures that a program component accepts and returns information in the correct order and format. It is often a part of integration testing and is necessary to ensure that a component does not break when integrated into the overall program. Stubs and drivers are important for component testing and sometimes incorporate test cases or debugging code. Some agile developers prefer to conduct interface testing using a copy of the production version of the program with additional debugging code.
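The roles of a stub and a driver can be illustrated with a small sketch. All names here (`AccountStoreStub`, `balance_report`, `driver`) are hypothetical. The stub stands in for a subordinate module and returns canned data, and the driver feeds test data to the component and checks that information comes back in the expected order and format.

```python
class AccountStoreStub:
    """Stub: replaces a subordinate module and returns canned data."""

    def get_balance(self, account_id):
        return {"A-1": 125.5}.get(account_id)


def balance_report(account_id, store):
    """Component under test: returns (account_id, balance as 'd.dd' text)."""
    balance = store.get_balance(account_id)
    if balance is None:
        raise KeyError(account_id)
    return account_id, f"{balance:.2f}"


def driver():
    """Driver: calls the component with test data and checks its interface."""
    result = balance_report("A-1", AccountStoreStub())
    order_ok = result[0] == "A-1"        # identifier comes first
    format_ok = result[1] == "125.50"    # two decimal places, as text
    return order_ok and format_ok


print(driver())   # True
```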

19.5.2 Equivalence Partitioning

Equivalence partitioning is a black-box testing method that divides the input domain into classes of data from which test cases can be derived. The goal is to create an ideal test case that uncovers a class of errors that might otherwise require many test cases to be executed. Equivalence classes are based on an evaluation of input conditions, such as specific numeric values, ranges of values, sets of related values, or Boolean conditions. Test cases are selected to exercise the largest number of attributes of an equivalence class at once. Equivalence classes can be derived using the following guidelines:

- If an input condition specifies a range, one valid and two invalid equivalence classes are defined.

- If an input condition requires a specific value, one valid and two invalid equivalence classes are defined.

- If an input condition specifies a member of a set, one valid and one invalid equivalence class are defined.

- If an input condition is Boolean, one valid and one invalid class are defined.
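The range and set guidelines above can be sketched with one representative value per equivalence class. The component `accepts_order`, the range 1 to 100, and the currency set are assumptions made up for this illustration.

```python
VALID_CURRENCIES = {"USD", "EUR", "CAD"}   # hypothetical set of valid members


def accepts_order(quantity, currency):
    """Hypothetical component: quantity must be in 1..100, currency in the set."""
    return 1 <= quantity <= 100 and currency in VALID_CURRENCIES


# Range condition: one valid class and two invalid classes.
QUANTITY_CLASSES = {
    "below range": (-5, False),
    "in range": (50, True),
    "above range": (250, False),
}

# Set-membership condition: one valid class and one invalid class.
CURRENCY_CLASSES = {
    "member": ("EUR", True),
    "non-member": ("XYZ", False),
}


def run_partition_tests():
    """Run one representative per class; map class name to pass/fail."""
    results = {}
    for name, (quantity, expected) in QUANTITY_CLASSES.items():
        results[name] = accepts_order(quantity, "USD") == expected
    for name, (currency, expected) in CURRENCY_CLASSES.items():
        results[name] = accepts_order(50, currency) == expected
    return results


print(all(run_partition_tests().values()))   # True
```

Five test cases stand in for the whole input domain, one per class.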

19.5.3 Boundary Value Analysis

Boundary value analysis (BVA) is a testing technique that focuses on the boundaries of the input and output domains of software components. It complements equivalence partitioning by selecting test cases at the “edges” of the equivalence classes. Guidelines for BVA include testing the minimum and maximum values, values just above and below, and prescribed boundaries of input and output conditions and data structures. By applying these guidelines, software engineers can perform more complete boundary testing, which increases the likelihood of detecting errors.
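For a numeric range, the BVA guideline yields six values: the minimum and maximum, plus the values just above and just below each. The sketch below assumes a hypothetical component that accepts integer quantities from 1 to 100; `boundary_values` and `is_valid_quantity` are illustrative names.

```python
def boundary_values(low, high, step=1):
    """Values at and just around both edges of the range [low, high]."""
    return [low - step, low, low + step, high - step, high, high + step]


def is_valid_quantity(quantity):
    """Hypothetical component: accepts integer quantities from 1 to 100."""
    return 1 <= quantity <= 100


values = boundary_values(1, 100)
print(values)                                   # [0, 1, 2, 99, 100, 101]
print([is_valid_quantity(v) for v in values])   # [False, True, True, True, True, False]
```

An off-by-one mistake in the component, such as writing `1 < quantity`, would pass a mid-range equivalence test but fail here at the value 1.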

19.6 Object-Oriented Testing

In object-oriented software, encapsulation drives the definition of classes and objects. Each class and each instance of a class packages attributes and the operations that manipulate these data. The encapsulated class is usually the focus of unit testing, but operations within the class are the smallest testable units. In a class hierarchy, an operation may exist as part of a number of different classes, and it is necessary to test the operation in the context of each subclass. Testing a single operation in isolation is usually ineffective in the object-oriented context.

19.6.1 Class Testing

Class testing for object-oriented software is equivalent to unit testing for conventional software, but it focuses on the operations and state behavior of a class rather than the algorithmic detail and data flow across module interfaces. A banking application is used as an example, with an Account class having operations such as open(), setup(), deposit(), withdraw(), balance(), summarize(), creditLimit(), and close().

A minimum test sequence of operations for an account is:

open • setup • deposit • withdraw • close

A wide variety of other behaviors can occur within this sequence:

open • setup • deposit • [deposit | withdraw | balance | summarize | creditLimit]^n • withdraw • close

Random-order tests are conducted to exercise different class instance life histories:

Test case r1 : open • setup • deposit • deposit • balance • summarize • withdraw • close

Test case r2 : open • setup • deposit • withdraw • deposit • balance • creditLimit • withdraw • close

Test equivalence partitioning can reduce the number of required test cases.
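Random-order sequences of this shape can be generated mechanically. The following is a minimal sketch: `random_life_history` is a hypothetical helper, and it only produces the list of operation names, leaving the calls against a real Account implementation to the test harness.

```python
import random

# Operations that may repeat in any order in the middle of a life history.
MIDDLE_OPS = ["deposit", "withdraw", "balance", "summarize", "creditLimit"]


def random_life_history(n, rng):
    """open, setup, deposit, then n random middle operations, withdraw, close."""
    middle = [rng.choice(MIDDLE_OPS) for _ in range(n)]
    return ["open", "setup", "deposit"] + middle + ["withdraw", "close"]


# n = 0 gives the minimum test sequence.
print(" • ".join(random_life_history(0, random.Random())))
# open • setup • deposit • withdraw • close

# A seeded generator makes a random test case reproducible.
rng = random.Random(42)
for _ in range(2):
    print(" • ".join(random_life_history(4, rng)))
```

Seeding the generator is a design choice that lets a failing random sequence be replayed exactly.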

19.6.2 Behavioral Testing

The state diagram for a class, which represents the dynamic behavior of the class, can be used to derive a sequence of tests for the class and its collaborators. An example of a state diagram for the Account class is provided in Figure 19.7, which shows transitions between states such as empty acct, setup acct, working acct, nonworking acct, and dead acct. The majority of behavior for instances of the class occurs in the working acct state. A final withdrawal and account closure cause the final transitions, to the nonworking acct and dead acct states respectively.

State-based test cases should achieve coverage of every state in the system. The approach applies to the Account class and equally to other objects, such as a CreditCard object. In a breadth-first approach, each test case exercises a single transition, and when a new transition is to be tested, only previously tested transitions are used.

Test case S1 : open • setupAccnt • deposit(initial) • withdraw(final) • close

Additional test sequences extend the minimum sequence:

Test case S2 : open • setupAccnt • deposit(initial) • deposit • balance • credit • withdraw(final) • close

Test case S3 : open • setupAccnt • deposit(initial) • deposit • withdraw • accntInfo • withdraw(final) • close
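The three test cases can be replayed against a transition table to confirm which states and transitions they cover. The table below is an assumption reconstructed from the state names and the test sequences in these notes, not a copy of Figure 19.7; the `start` pseudo-state is also hypothetical.

```python
# Assumed transition table: (current state, event) -> next state.
TRANSITIONS = {
    ("start", "open"): "empty acct",
    ("empty acct", "setupAccnt"): "setup acct",
    ("setup acct", "deposit(initial)"): "working acct",
    ("working acct", "deposit"): "working acct",
    ("working acct", "withdraw"): "working acct",
    ("working acct", "balance"): "working acct",
    ("working acct", "credit"): "working acct",
    ("working acct", "accntInfo"): "working acct",
    ("working acct", "withdraw(final)"): "nonworking acct",
    ("nonworking acct", "close"): "dead acct",
}

S1 = ["open", "setupAccnt", "deposit(initial)", "withdraw(final)", "close"]
S2 = ["open", "setupAccnt", "deposit(initial)", "deposit", "balance",
      "credit", "withdraw(final)", "close"]
S3 = ["open", "setupAccnt", "deposit(initial)", "deposit", "withdraw",
      "accntInfo", "withdraw(final)", "close"]


def run(sequence):
    """Replay events from 'start'; return the (state, event) pairs taken."""
    state = "start"
    taken = []
    for event in sequence:
        if (state, event) not in TRANSITIONS:
            raise ValueError(f"illegal event {event!r} in state {state!r}")
        taken.append((state, event))
        state = TRANSITIONS[(state, event)]
    return taken, state


def coverage(sequences):
    """Union of transitions exercised by all sequences."""
    covered = set()
    for sequence in sequences:
        taken, _ = run(sequence)
        covered.update(taken)
    return covered


print(run(S1)[1])                                    # dead acct
print(coverage([S1]) == set(TRANSITIONS))            # False
print(coverage([S1, S2, S3]) == set(TRANSITIONS))    # True
```

Under this assumed table, S1 alone reaches every state, while S2 and S3 are needed to cover the transitions that stay within working acct.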
